We’ve been huge fans of the new GPT4-class large language models since the beginning. In PS Week 230511 Dr GPT4, we showed several stunning examples of how to use AI to help with medical questions.

This week we’ll check in on the progress in medical AI for personal scientists.

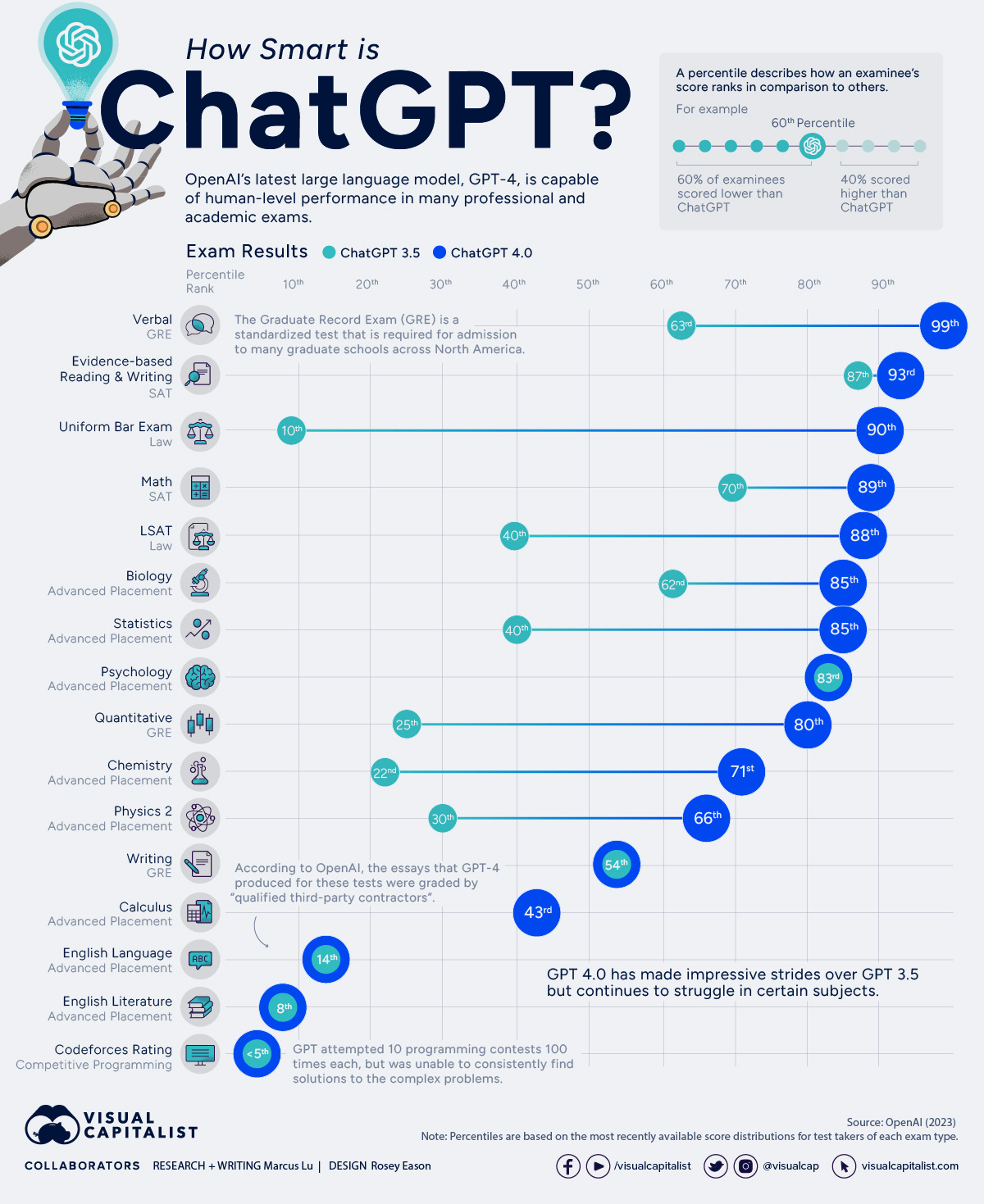

Here’s Marcus Lu’s summary of how GPT compares against standardized tests:

In a word, progress since last year has been impressive. And new applications are arriving every day.

Some AI doctors to try

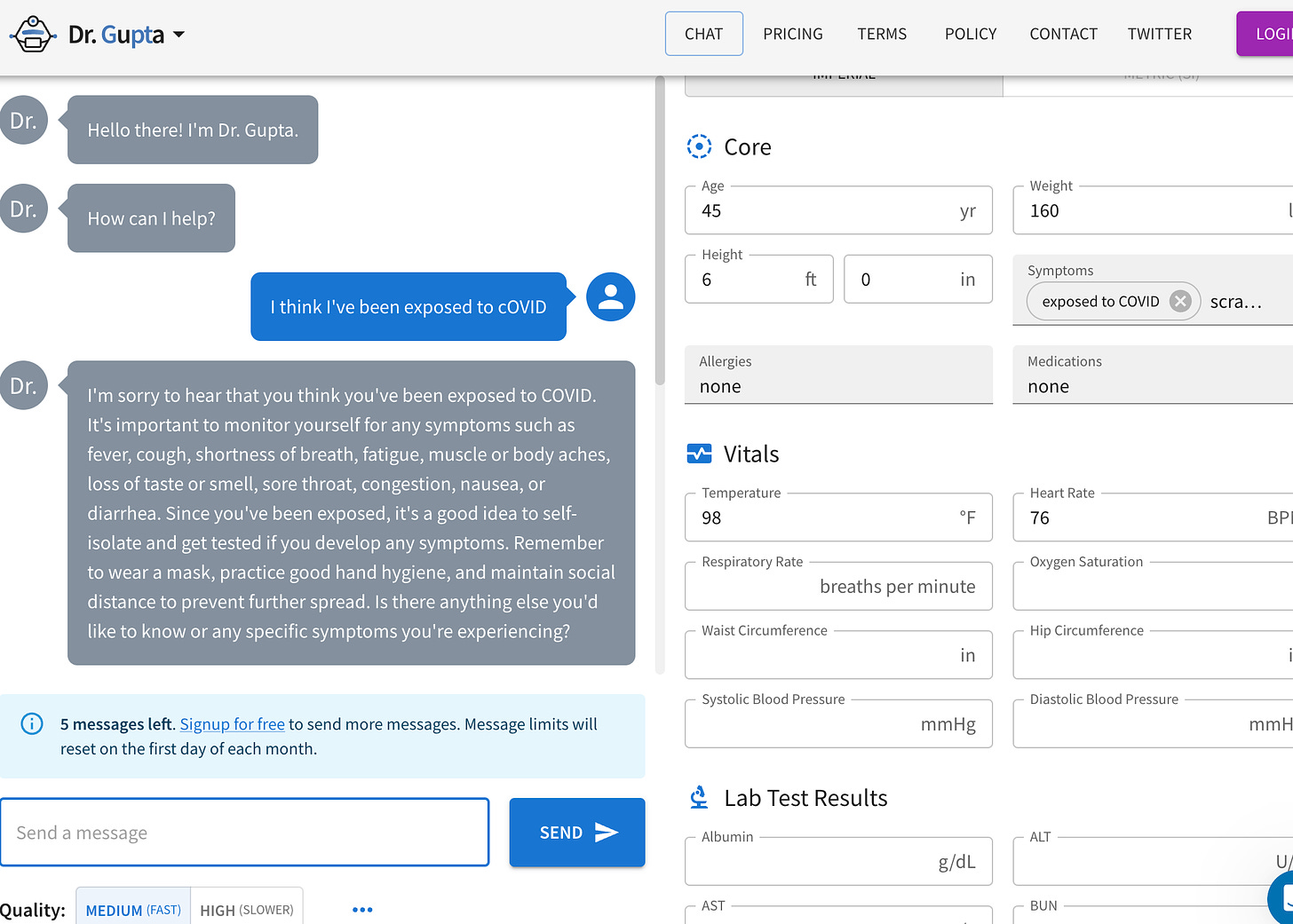

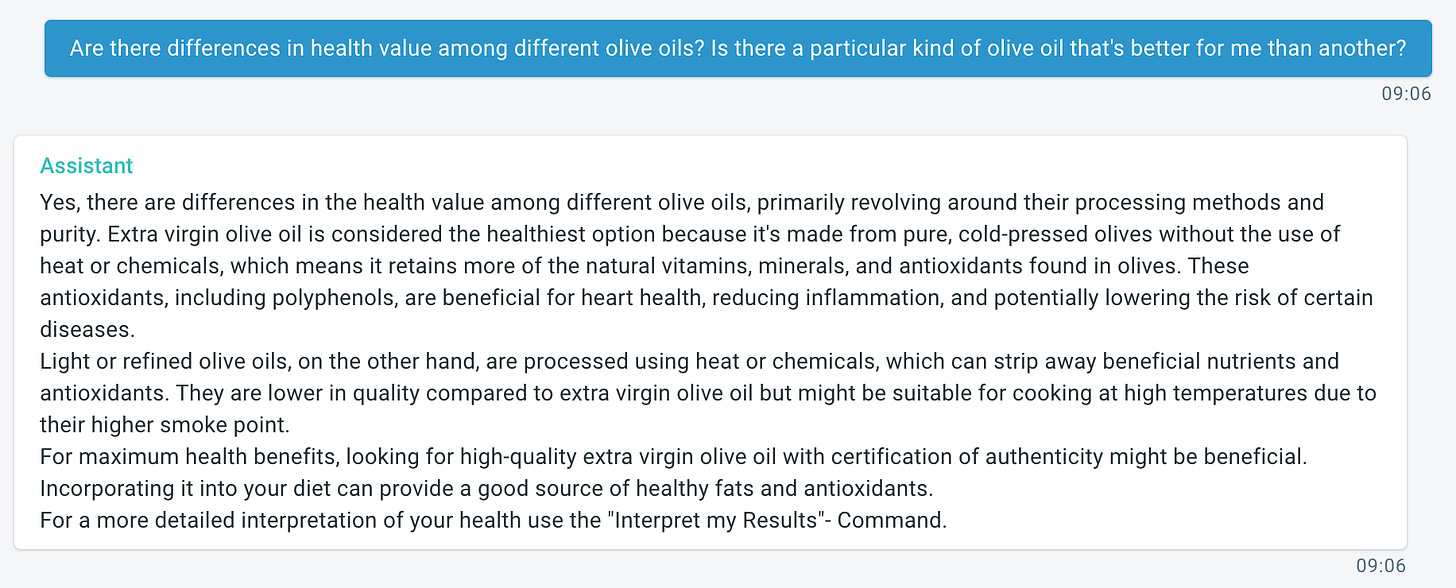

DrGupta.AI is a front-end to ChatGPT that specializes in medical advice. Enter your vital signs, including blood test results, and ask away. Free for up to 12 messages/month, or $20 for unlimited. Dr.Gupta VET offers something similar for your pet.

If you’re a subscriber to ChatGPT, you can try the HouseGPT chatbot. It pretends to be one of those maverick doctors on the old TV series House M.D. Enter your patient details and it tries to offer a diagnosis, generally something less obvious than your typical doctor. Worth trying for tough cases. See Patrick Blumenthal’s post on X for an example.

Our friends at OpenCures have been running a GPT4-based AI Chat for a while. Create an account, upload some of your data, and ask whatever health questions you like.

If you want to research a specific condition, the AI-based search systems are getting very good.

Elicit keeps getting better. Since our first mention of them, Elicit became an independent company, raised funding, and launched a brand new version of the product with dozens of important new features, including the ability to upload your own papers, import from Zotero, summarize the most important papers, and more.

Here's a sample query "Does wearing an activity tracker improve health outcomes?"

What’s next?

Although the improvements keep coming, I’m beginning to wonder if we’ve already reached the most important plateau we’ll get from the core power of these models. Think of Microsoft Word as an analogy. The first PC-based word processors were genuinely transformational for anyone who needs to write. Innumerable important features have been added since that first version, but the basic concept and most use cases are still recognizable decades later.

Rodney Brooks, a long-time practical AI scientist behind numerous robotics companies including iRobot, maker of the Roomba, says LLMs have made him rethink some of his long-held assumptions about AI. ChatGPT seems to have conquered 99% of AI’s long-standing biggest challenges. But to a seasoned researcher, it’s that last 1% that poses the real problems. It’s not obvious that you can just add more data or better algorithms.

Like many of us, I suspected after my first impressions of GPT-4 that PS Week could be written entirely with AI: a bot could search for interesting articles on the internet and then summarize them. Daily.AI kind of does that already, with reasonably good results.

But after using these models intensively every day, I’ve concluded that something’s still a little off. GPT-generated prose is quite good, and it’s a great companion to help understand complicated subjects. But AI-generated content is never quite what I, as a human, would write.

This is especially true in medical applications. Sure, ChatGPT can quickly spit out a diagnosis that seems very plausible. But in many (most?) situations, the diagnosis and treatment are the easy part. You already know what you need to do (eat healthily, get more exercise, minimize stress). The hard part is doing it, sticking to it, and modifying the treatment as things change.

As Baldur Bjarnason puts it in The LLMentalist Effect: how chat-based Large Language Models replicate the mechanisms of a psychic’s con:

The intelligence illusion is in the mind of the user and not in the LLM itself.

Links worth your time

Whoop Coach is an OpenAI-based chatbot integrated with their popular fitness wearable. Ask it questions about HRV, specifics about your workout, and more. See this short description. They’ll even send you one to try for free for a month.

Guava Health is another in the long line of “dashboard” apps that consolidate all your various tracking devices to help you find insights in your health data. "Discover how air pressure affects your headaches" is one of the examples on their website. I haven’t used it, but it came recommended by somebody I trust.

Many bathroom scales, including Withings and Renpho, deliberately remember your last weigh-in so they can seem more accurate.

We’re waiting to get our copy of Susannah Fox’s new book Rebel Health: A Field Guide to the Patient-Led Revolution in Medical Care. Meanwhile, read a short interview in Psychology Today in which she offers this suggestion for how to “hack” your healthcare:

A good place to start is what's called “personal science.” You can track what's important to you: a symptom, diet, sleep, or exercise routine. Start to make small changes and experiment with how to improve.

If you’re in the Seattle area on Wednesday, March 13, please join me at Town Hall Seattle for the author’s conversation with our friend, science writer Sally James. Susannah will be in San Francisco that week as well.

About Personal Science

AI and other technologies are moving so quickly that it’s hard to keep up. Personal Scientists like to stay on top of things, of course, but sometimes it’s better to take a longer, more skeptical view. What is the scientific method, really, and how does it apply to personal questions?

Several Personal Scientists meet weekly on Zoom, hosted by the 501(c)(3) non-profit Open Humans Foundation. The one-hour meetup is open to anyone with an interest in personal science. A core group of half a dozen regular attendees (including me!) makes for a friendly, highly knowledgeable group that is well worth attending if you have the slightest interest in this subject. Learn more at their Google Doc.

If you have ideas or other topics you think will benefit other Personal Scientists, please let us know.