Personal Science Week - 240201 Statistics

Self-reported studies, clinical trials, plus links about statistics

You’re hopeful about some treatment. It seems to make biological sense, and you know many intelligent people who claim good results. Then some well-done study seems to show it’s a failure. Does a good personal scientist give up?

This week we look at some situations where you shouldn’t give up.

Personal scientists already know that professional scientists make mistakes. Just this week, the Harvard Medical School-associated Dana-Farber Cancer Institute had to retract 6 papers after a blogger noted serious problems with image manipulation.

We try to be skeptical about everything, including whether some of this “fraud” is intentional or not. (Paid subscribers have already seen our too-frank discussion of the Claudine Gay scandal at Harvard.)

But set aside deliberate fraud for now. How should we think about published research that doesn’t seem to conform to our own intuitions?

Clinical trials don’t necessarily settle it

So what about that big peer-reviewed study? Does it invalidate your own experience?

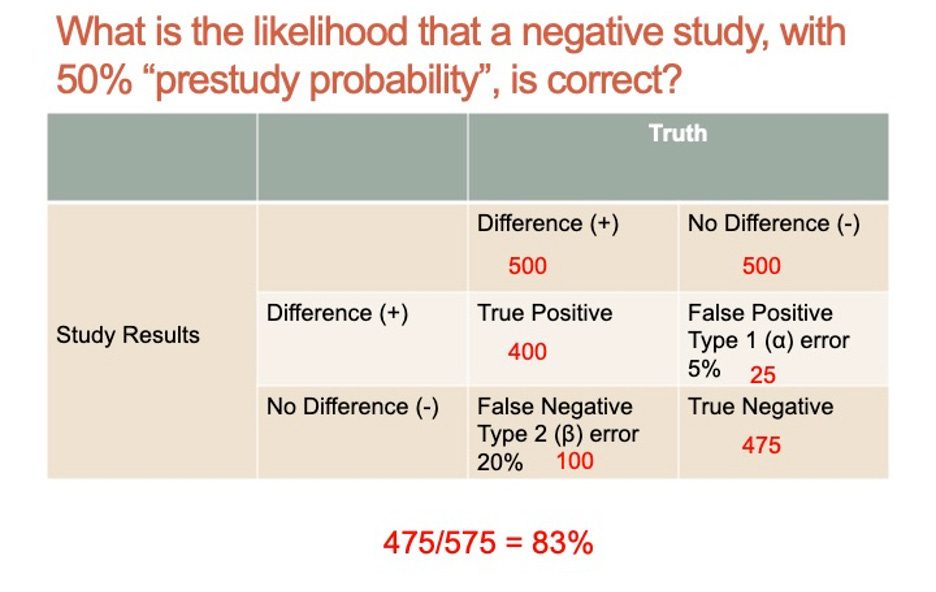

No, and Sensible Medicine’s Dr. Adam Cifu explains why Evidence-Based Medicine calls for more art, not less, especially in cases like this. Skeptical scientists would rather be very sure that a treatment works than very sure that it doesn’t, so trials are typically designed to tolerate far fewer false positives than false negatives. Don’t give up on a promising idea just because it failed in one study. Here’s how Cifu summarizes the math:

One negative study on a promising idea should reduce your confidence, but not all the way.

If you think an idea has a 50/50 chance of being correct before the study, then a negative result shouldn’t tip you all the way into rejecting it. Instead, you go from 50/50 to roughly 17/83. In other words, there’s still a chance.
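The 17/83 figure is what Bayes’ theorem gives under the conventional trial design of 80% power and a 5% significance level. Cifu’s exact inputs aren’t spelled out here, so treat those two numbers as assumptions; the function name below is hypothetical. A minimal Python sketch:

```python
def posterior_after_negative(prior, power=0.80, alpha=0.05):
    """Probability a treatment works, given one negative (non-significant) trial.

    Bayes' theorem, assuming a conventionally designed trial:
      P(negative | treatment works)        = 1 - power  (a false negative)
      P(negative | treatment doesn't work) = 1 - alpha  (a true negative)
    """
    p_neg_if_works = 1 - power
    p_neg_if_not = 1 - alpha
    numerator = prior * p_neg_if_works
    return numerator / (numerator + (1 - prior) * p_neg_if_not)


# Start at 50/50 and observe one negative trial.
after_one = posterior_after_negative(0.5)
print(round(after_one, 2))  # 0.17, i.e. roughly 17/83

# Feed the result back in for a second, independent negative trial.
after_two = posterior_after_negative(after_one)
print(round(after_two, 2))  # 0.04
```

The asymmetry comes from the design: a trial that misses a real effect 20% of the time (80% power) is weaker evidence against a treatment than a 5% false-positive rate is evidence for one. It takes repeated negative results before the odds fall close to zero.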

Self-reported studies can be just fine

A common objection to some studies, especially those from personal scientists, is that they’re based on self-reports. Twitter user @datepsych (who goes by the first name Alexander) wonders whether studies based on self-reports are dismissed too quickly simply because they challenge preconceived notions. When we agree with the conclusions, we think self-reports are fine.

He uses the example of divorce. You’ve probably heard the statistic that “70% of women initiate divorces”, which sounds reasonable if you think a lot of men are nasty brutes. If you don’t, then maybe you’re tempted to criticize the self-reported way the data was collected.

So Alexander studied every possible source that can answer the question: Who initiates more divorces? You can read his excellent, chart- and table-filled summary for a demonstration of how our reaction to these studies depends on the assumptions we bring going in. It’s not the reliability of self-reporting that matters.[1]

Think about why you care about the answer. The simple question “who initiates?” is only simple if you ask it with a preconceived bias. Real phenomena, including divorce, are super-complicated. We feel a rush of insight when we get a final number (70 percent!) only because it distracts us from digging into that complexity.

Instead, think more about your actual question. You may learn more by framing your question more precisely and by using the self-reported data as intended: as a guide to the answer, not a conclusion.

In this case, maybe your actual question is about how a woman ends up married to the wrong person. Or maybe you’re wondering about personalities and whether they affect the results. The point is, ask the question that matters, not the one that conforms to your preconceived ideas.

Whether or not the conclusions make sense, don’t blame the self-reports. Sometimes self-reports are literally all you have.

Links worth your time

Speaking of statistics, here are a few sources worth checking:

You can use Excel or Google Sheets to do a very basic check of whether a simple hypothesis is worth exploring further. We showed a simple example (“does alcohol affect my sleep?”) in PS Week 230914.
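The spreadsheet steps from PS Week 230914 aren’t reproduced here. As a hedged alternative, here is a minimal Python sketch of one common basic check, a permutation test, assuming you’ve logged a nightly sleep score and split your nights into “drank” and “didn’t drink.” The function names and the numbers in the example are hypothetical placeholders, not real data or the method from that issue.

```python
import random
import statistics


def mean_difference(group_a, group_b):
    """Average of group A minus average of group B."""
    return statistics.mean(group_a) - statistics.mean(group_b)


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Two-sided permutation test on the difference in means.

    Shuffles the "alcohol"/"no alcohol" labels many times and counts how often
    a random labeling produces a difference at least as large as the real one.
    A small value suggests the difference is unlikely to be pure chance.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(mean_difference(group_a, group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        if abs(mean_difference(pooled[:n_a], pooled[n_a:])) >= observed:
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return (extreme + 1) / (n_shuffles + 1)


# Hypothetical placeholder sleep scores, NOT real measurements.
alcohol_nights = [71, 68, 74, 65, 70]
sober_nights = [78, 75, 80, 73, 77, 79]

print("difference in means:", mean_difference(alcohol_nights, sober_nights))
print("permutation p-value:", permutation_p_value(alcohol_nights, sober_nights))
```

Swap in your own logged numbers. In the spirit of this issue, a small p-value from a handful of nights is a hint that the idea is worth exploring further, not a conclusion.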

Learn more about personal science statistics at the Open Humans Personal Science Wiki.

Columbia University Professor Andrew Gelman is the G.O.A.T. of statistics. His frequent blog posts are the first place I look for a professional take on any statistical issue in the news. Start with his Handy Statistical Lexicon that summarizes common tips and tricks.

Cartoonist Timo Elliott writes humorously about “criminal uses of statistics.”

More for personal scientists to try

Our friends at Tastermonial are looking for personal scientists to try a new herbal supplement. They’ll give you 30 days of GlucoTrojan (the glucose-lowering powder we tried in PS Week 230615), a bunch of food vouchers, and two free before/after blood tests. Learn more and sign up at their pre-screening form.

Passing along an invitation from another effort hoping to make science more personal:

Seeds of Science would like to announce the SoS Research Collective, a first-of-its-kind virtual organization for supporting independent researchers (and academics thinking independently). In brief, we are offering researchers the following:

1) A title (SoS Research Fellow) and researcher profile page

2) Payment of $50 per article published in our peer-reviewed journal

3) Editing services and advising

4) Promotion of your work on the SoS Substack

See the full announcement post for more information on the structure and philosophy of the Collective.

Anyone conducting independent research is welcome to join (including academics doing some kind of work outside of their primary academic research). To apply, shoot us an email that tells us who you are and what your research is about—CV, website, blog, twitter, etc.—and we will go from there (info@theseedsofscience.org)

About Personal Science

Personal Science is about using the techniques of science for personal rather than professional reasons. This is a weekly update, delivered each Thursday, of ideas we think are useful to anyone striving to apply science to everyday life.

Please contact us if you have other topics of interest, ask informally in our chat, or leave a comment.

[1] tl;dr: divorce is usually a mutual decision, with no clear side pushing or resisting.

I think you mean 17/83.

Methodology is extremely important, and there are many studies that haven't been published simply because the study authors didn't like the results. That in itself can skew the consensus of what reality is perceived to be. Two useful books in this area for me are "Doctoring Data" by Dr. Malcolm Kendrick, 2014, and "Death by Food Pyramid" by Denise Minger, 2013. Minger discusses the different types of studies, the nature of correlation coefficients, and other things in relatively simple terms.

One other thing I would relate is that it's impossible to be an expert on everything, and to even be knowledgeable in a single area takes time and effort. I try to focus my own time on a relatively limited number of subjects in the hope of achieving some level of genuine insight.